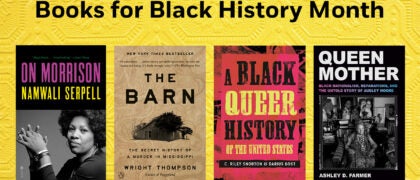

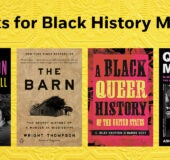

Books for Black History Month

Join Penguin Random House Education in celebrating the contributions of Black authors. In honor of Black History Month this February, we are highlighting essential fiction and nonfiction for students and educators to share and discuss this month and beyond. Find a full list of titles here.