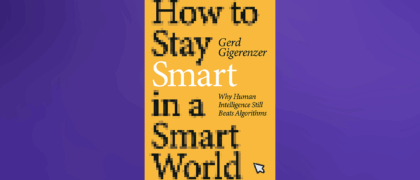

Technological solutionism is the belief that every societal problem is a “bug” that needs a “fix” through an algorithm. Technological paternalism is its natural consequence, government by algorithms. It doesn’t need to peddle the fiction of a superintelligence; it instead expects us to accept that corporations and governments record where we are, what we are doing, and with whom, minute by minute, and also to trust that these records will make the world a better place. As Google’s former CEO Eric Schmidt explains, “The goal is to enable Google users to be able to ask the question such as ‘What shall I do tomorrow’ and ‘What job shall I take?’”23 Quite a few popular writers instigate our awe of technological paternalism by telling stories that are, at best, economical with the truth.24 More surprisingly, even some influential researchers see no limits to what AI can do, arguing that the human brain is merely an inferior computer and that we should replace humans with algorithms whenever possible.25 AI will tell us what to do, and we should listen and follow. We just need to wait a bit until AI gets smarter. Oddly, the message is never that people need to become smarter as well.

I have written this book to enable people to gain a realistic appreciation of what AI can do and how it is used to influence us. We do not need more paternalism; we’ve had more than our share in the past centuries. But nor do we need technophobic panic, which is revived with every breakthrough technology. When trains were invented, doctors warned that passengers would die from suffocation.26 When radio became widely available, the concern was that listening too much would harm children because they need repose, not jazz.27 Instead of fright or hype, the digital world needs better-informed and healthily critical citizens who want to keep control of their lives in their own hands.

23. Daniel and Palmer, “Google’s Goal.”

24. Overstated claims about algorithms without supporting evidence can be found, for instance, in Harari, Homo Deus. I provide examples in chapter 11.

25. See the spectrum of opinions in Brockman, Possible Minds. Also, Kahneman (“Comment,” 609) poses the question whether AI can eventually do whatever people can do: “Will there be anything that is reserved for human beings? Frankly, I don’t see any reason to set limits on what AI can do.” And: “You should replace humans by algorithms whenever possible” (610).

26. Gigerenzer, Risk Savvy.

27. On fear cycles, see Orben, “Sisyphean Cycle.”

Copyright © 2025 by Gerd Gigerenzer. All rights reserved. No part of this excerpt may be reproduced or reprinted without permission in writing from the publisher.